You can add a connection to the HDFS file system using ThoughtSpot DataFlow.

Follow these steps:

- Click Connections in the top navigation bar.
- In the Connections interface, click Add connection in the top right corner.
- In the Create Connection interface, select the Connection type.
- After you select the Connection type, the rest of the connection properties appear.

Depending on your choice of authentication mechanism, you may use different properties. <!–

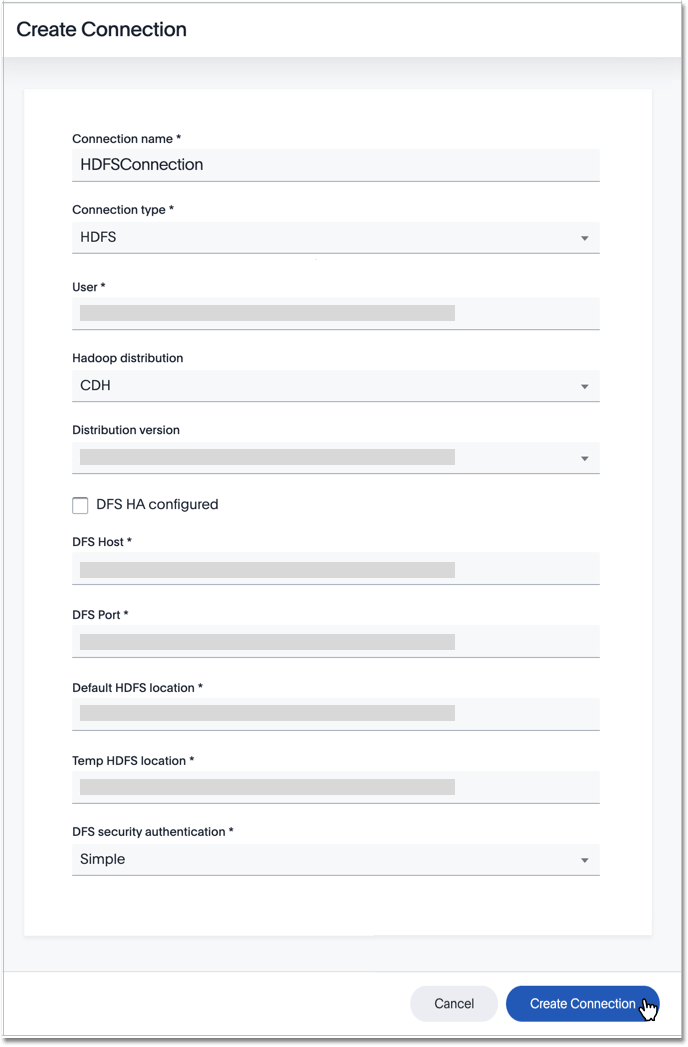

See the Create connection screen for HDFS

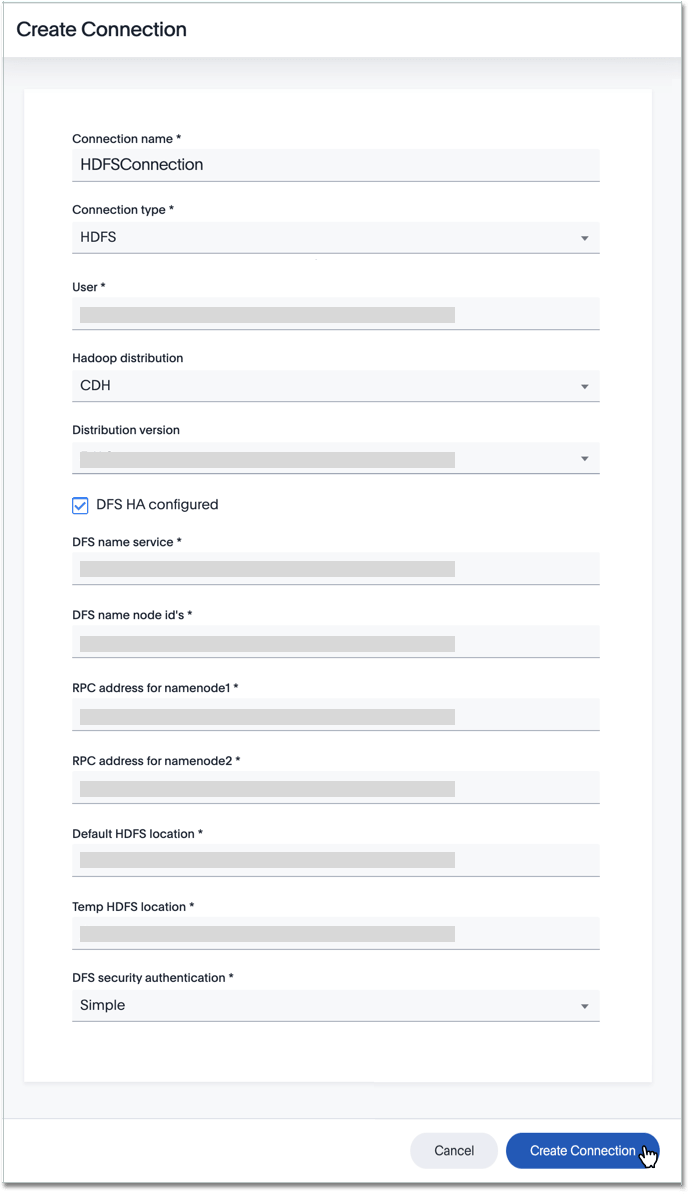

See the Create connection screen for HDFS with DFS-HA enabled

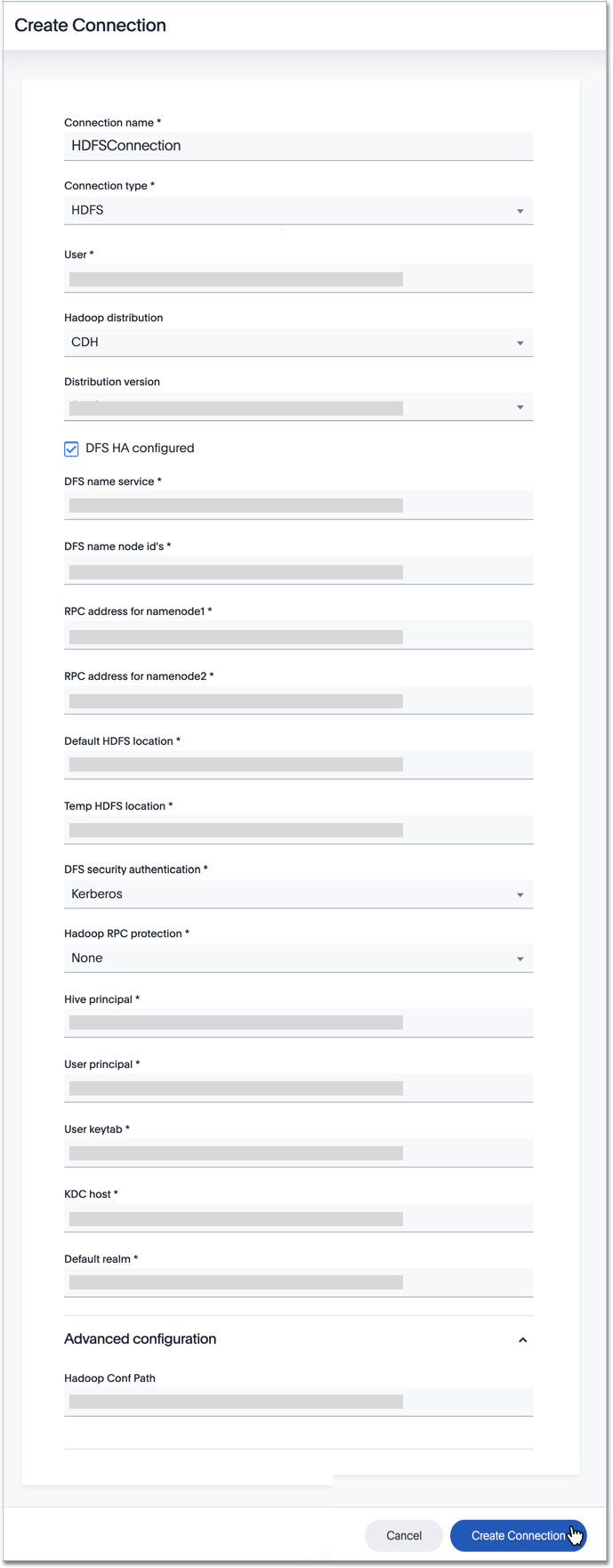

See the Create connection screen for HDFS with DFS-HA enabled, and Kerberos authentication

–>

- Connection name

  Name your connection.

- Connection type

  Choose the HDFS connection type.

- User

  The user that connects to the HDFS file system. This user must have data access privileges. Applies only to Hive security with simple, LDAP, or SSL authentication.

- Hadoop distribution

  The distribution of Hadoop you are connecting to.

  Mandatory field.

- Distribution version

  The version of the Hadoop distribution selected above.

  Mandatory field.

- Hadoop conf path

  By default, the system picks up the Hadoop configuration files from HDFS. To override this, specify an alternate location. Use this only when your configuration settings differ from the global Hadoop instance settings.

- HDFS HA configured

  Enables High Availability (HA) for HDFS.

  Optional field.

- HDFS name service

  The logical name given to the HDFS nameservice.

  Mandatory field. For HDFS HA only.

- HDFS name node IDs

  A comma-separated list of NameNode IDs. DataNodes use this property to determine all the NameNodes in the cluster. The corresponding XML property is `dfs.ha.namenodes.<nameservice ID>`, where `<nameservice ID>` is the value of `dfs.nameservices`. For HDFS HA only.

- RPC address for namenode1

  The fully qualified RPC address of the first listed NameNode, defined as `dfs.namenode.rpc-address.<nameservice ID>.<name_node_ID_1>`. For HDFS HA only.

- RPC address for namenode2

  The fully qualified RPC address of the second listed NameNode, defined as `dfs.namenode.rpc-address.<nameservice ID>.<name_node_ID_2>`. For HDFS HA only.

- DFS host

  The DFS host name or IP address.

  Mandatory field when not using HDFS HA.

- DFS port

  The associated DFS port.

  Mandatory field when not using HDFS HA.

- Default HDFS location

  The default HDFS location for source and target data.

  Mandatory field.

- Temp HDFS location

  The HDFS location in which to create the temporary directory.

  Mandatory field.

- HDFS security authentication

  The type of security enabled on the cluster.

  Mandatory field.

- Hadoop RPC protection

  Hadoop cluster administrators control the quality of protection with the configuration parameter `hadoop.rpc.protection` (authentication, integrity, or privacy). Specify the value configured on your cluster.

  Mandatory field when HDFS security authentication uses Kerberos.

- Hive principal

  The principal for authenticating Hive services.

  Mandatory field.

- User principal

  The user principal associated with the keytab, configured when Kerberos was enabled. To authenticate with a keytab, you need both a keytab file generated by the Kerberos administrator and the user principal associated with it.

  Mandatory field.

- User keytab

  The keytab file generated by the Kerberos administrator. To authenticate with a keytab, you need both this file and the user principal associated with it (configured when Kerberos was enabled).

  Mandatory field.

- KDC host

  The host name of the KDC (Kerberos Key Distribution Center), a service that runs on a domain controller server role. This value is configured in the Kerberos configuration file, /etc/krb5.conf.

  Mandatory field.

- Default realm

  The default Kerberos realm. A Kerberos realm is the domain over which a Kerberos authentication server has the authority to authenticate a user, host, or service. This value is configured in the Kerberos configuration file, /etc/krb5.conf.

  Mandatory field.
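When HDFS HA configured is enabled, the HDFS name service, HDFS name node IDs, and RPC address fields above mirror standard Hadoop HDFS client properties. The following Python sketch shows that mapping by rendering the values in the hdfs-site.xml layout, which can help when you cross-check what you enter against your cluster's configuration. It is an illustration only, not how DataFlow stores the connection; the helper names `ha_properties` and `to_hadoop_xml`, the nameservice `mycluster`, and the NameNode IDs and addresses are hypothetical.

```python
import xml.etree.ElementTree as ET


def ha_properties(nameservice: str, namenode_rpc: dict) -> dict:
    """Map the HDFS HA connection fields to the Hadoop property names they mirror.

    nameservice:  value of the "HDFS name service" field.
    namenode_rpc: NameNode ID -> "host:port" RPC address, in the same order
                  as the "HDFS name node IDs" field.
    """
    props = {
        "dfs.nameservices": nameservice,
        # "HDFS name node IDs": comma-separated list of NameNode IDs.
        f"dfs.ha.namenodes.{nameservice}": ",".join(namenode_rpc),
    }
    for nn_id, address in namenode_rpc.items():
        # "RPC address for namenode1/namenode2": one entry per listed NameNode.
        props[f"dfs.namenode.rpc-address.{nameservice}.{nn_id}"] = address
    return props


def to_hadoop_xml(props: dict) -> str:
    """Render properties in the <configuration>/<property> layout of hdfs-site.xml."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# Hypothetical values, for illustration only.
example = ha_properties(
    "mycluster",
    {"nn1": "nn1.example.com:8020", "nn2": "nn2.example.com:8020"},
)
print(to_hadoop_xml(example))
```

The NameNode IDs are taken from the dictionary keys in insertion order, so the comma-separated list matches the order in which the RPC addresses are given.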
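The KDC host and Default realm values come from the Kerberos configuration file, /etc/krb5.conf. As a minimal sketch, assuming the common layout in which `default_realm` sits under `[libdefaults]` and each realm block under `[realms]` lists one or more `kdc` entries, the hypothetical helper `read_krb5_conf` below extracts both values. It is a simplified reader, not a full krb5.conf parser: it does not handle include directives or nested sub-blocks inside a realm.

```python
from typing import Dict, List, Optional, Tuple


def read_krb5_conf(text: str) -> Tuple[Optional[str], Dict[str, List[str]]]:
    """Return (default_realm, {realm: [kdc, ...]}) from krb5.conf text.

    Simplified sketch: handles [libdefaults] default_realm and single-level
    realm blocks under [realms]; nested sub-blocks and includes are not supported.
    """
    section: Optional[str] = None
    realm: Optional[str] = None
    default_realm: Optional[str] = None
    kdcs: Dict[str, List[str]] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # blank line or comment
        if line.startswith("[") and line.endswith("]"):
            section, realm = line[1:-1].strip(), None  # new section header
            continue
        if section == "realms" and line.endswith("{"):
            realm = line.split("=", 1)[0].strip()  # e.g. "EXAMPLE.COM = {"
            continue
        if section == "realms" and line == "}":
            realm = None  # end of the current realm block
            continue
        if "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if section == "libdefaults" and key == "default_realm":
            default_realm = value
        elif section == "realms" and realm is not None and key == "kdc":
            kdcs.setdefault(realm, []).append(value)
    return default_realm, kdcs


# Hypothetical krb5.conf content, for illustration only.
SAMPLE = """\
[libdefaults]
    default_realm = EXAMPLE.COM

[realms]
    EXAMPLE.COM = {
        kdc = kdc.example.com
        admin_server = kdc.example.com
    }
"""
print(read_krb5_conf(SAMPLE))  # ('EXAMPLE.COM', {'EXAMPLE.COM': ['kdc.example.com']})
```

On a host with Kerberos configured, you could pass it the contents of /etc/krb5.conf, for example `read_krb5_conf(Path("/etc/krb5.conf").read_text())` with `pathlib.Path`. A `kdc` entry can include a port (such as `kdc.example.com:88`), so check whether you need only the host name for the KDC host field.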

See Connection properties for details, defaults, and examples.


- Click Create connection.